Introduction

I have a somewhat unique opportunity in this writeup to highlight my experience as an iOS research newcomer. Many high quality iOS kernel exploitation writeups have been published, but those often feature weaker initial primitives combined with lots of cleverness, so it’s hard to tell which iOS internals were specific to the exploit and which are generic techniques.

In this post, we’ll look at CVE-2019-8605, a vulnerability that was present in the iOS and macOS kernel for five years, and how to exploit it to achieve arbitrary kernel read/write. This issue affected XNU as early as 2013, and I reported it to Apple in March 2019. It was patched in iOS 12.3 in May 2019, and in July 2019 I released the complete details for analysis, including an iOS exploit named “SockPuppet.” The issue was then found to have regressed in iOS 12.4, and it was patched again in iOS 12.4.1 in late August 2019.

The primitive in SockPuppet is stronger than usual: it offers an arbitrary read and free with very little work. This makes it easier to see what a canonical iOS exploit looks like since we can skip over the usual work to set up strong initial primitives. I’ll begin by describing how I found my bug, and then explain how I exploited it given only a background in Linux and Windows exploitation. If you’re interested, I’ve collaborated with LiveOverflow to make a video explaining this bug. You can watch it here.

Bug Hunting

Why network fuzzing?

One technique for choosing fuzz targets is enumerating previous vulnerability reports for a given project, finding the bug locations in the source tree, and then picking up a component of the project that is self-contained and contains a diverse subset of the bugs. Then by creating a fuzzer which is fairly generic but can still reproduce the previous finds, you are likely to find new ones. When I started to work on my fuzzer, I used two bug reports to seed my research: an mptcp_usr_connectx buffer overflow by Ian Beer of Google Project Zero and an ICMP packet parsing buffer overflow by Kevin Backhouse of Semmle. What made these perfect candidates was that they were critical security issues in completely different parts of the same subsystem: one in the network-related syscalls and one in parsing of remote packets. If I could make a fuzzer that would make random network-related syscalls and feed random packets into the IP layer, I might be able to reproduce these bugs and find new ones. Those past bugs were discovered using code auditing and static analysis, respectively. As someone who primarily uses fuzzing to find memory corruption vulnerabilities, these are highly useful artifacts for me to study, since they come from some of the best practitioners of auditing and static analysis in the industry. In case I failed to reproduce the bugs or find any new ones, it would at least be an educational project for me. Success would validate that my approach was at least as good as the approaches originally used to discover these bugs. Failure would be an example of a gap in my approach.

The first draft of the fuzzer went off without a hitch: it found Ian’s and Kevin’s bugs with actionable ASAN reports. Even better, for the ICMP buffer overflow it crashed exactly on the line that Ian described in his email to Kevin as described on Semmle’s blog. When I saw how accurate and effective this was, I started to get really excited. Even better, my fuzzer went on to find a variant of the ICMP bug that I didn’t see mentioned publicly, but was fortunately addressed in Apple’s thorough patch for the vulnerability.

From Protobuf to PoC

The exact details of how the fuzzer works will be described in a future post, but some context is necessary to understand how this specific bug was found. At a high level, the fuzzer’s design is a lot like that of syzkaller. It uses a protobuf-based grammar to encode network-related syscalls with the types of their arguments. On each fuzzer iteration, it does a sequence of random syscalls, interleaving (as a pseudo-syscall) the arrival of random packets at the network layer.

For example, the syscall to open a socket is int socket(int domain, int type, int protocol). The protobuf message representing this syscall and its arguments is:

LibFuzzer and protobuf-mutator work together to generate and mutate protobuf messages using the format I defined. Then I consume these messages and call the real C implementation. The fuzzer might generate the following protobuf message as part of the sequence of messages representing syscalls:

In the loop over input syscall messages, I call the syscall appropriately based on the message type:

Here, you can see some of the light manual work that is involved: I keep track of open file descriptors by hand, so I can be sure to close them at the end of one fuzzer iteration.
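The dispatch loop and the manual descriptor bookkeeping described above could be sketched roughly as follows. This is a minimal C model, not the fuzzer’s actual code: the `syscall_msg` tagged union stands in for a decoded protobuf message, and every type and function name here is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/socket.h>
#include <unistd.h>

/* Hypothetical stand-in for one decoded protobuf syscall message. */
enum msg_kind { MSG_SOCKET, MSG_CLOSE };

struct syscall_msg {
    enum msg_kind kind;
    union {
        struct { int domain, type, protocol; } socket_args;
        struct { size_t fd_index; } close_args; /* index into the fd table */
    } u;
};

#define MAX_TRACKED_FDS 16

/* Runs one fuzzer iteration. Returns how many descriptors were still open
 * when the sequence ended and had to be closed by the cleanup pass. */
size_t run_iteration(const struct syscall_msg *msgs, size_t count)
{
    int open_fds[MAX_TRACKED_FDS];
    size_t n_open = 0;
    size_t left_open;

    assert(msgs != NULL || count == 0);

    for (size_t i = 0; i < count; i++) {
        const struct syscall_msg *m = &msgs[i];
        switch (m->kind) {
        case MSG_SOCKET: {
            if (n_open == MAX_TRACKED_FDS)
                break; /* table full: skip this message */
            int fd = socket(m->u.socket_args.domain,
                            m->u.socket_args.type,
                            m->u.socket_args.protocol);
            if (fd >= 0)
                open_fds[n_open++] = fd; /* remember it for cleanup */
            break;
        }
        case MSG_CLOSE: {
            size_t idx = m->u.close_args.fd_index;
            if (idx < n_open) {
                close(open_fds[idx]);
                n_open--;
                open_fds[idx] = open_fds[n_open]; /* swap-remove */
            }
            break;
        }
        }
    }

    /* Close whatever the sequence left behind so iterations stay isolated. */
    left_open = n_open;
    while (n_open > 0)
        close(open_fds[--n_open]);
    return left_open;
}
```

Keeping the table local to one iteration means a testcase cannot leak state into the next one, which helps keep crashes reproducible.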

The fuzzer started out by simply encoding all the network-related syscalls into messages that had the correct types for each argument. To improve coverage, I refined the grammar and made changes to the code under test. Because there is so much code to cover, the most efficient way to find bugs is to identify suspicious-looking code manually by auditing. Given our fuzzing infrastructure, we can look at the coverage metrics to understand how well-tested some suspicious code is and tweak the fuzzer to uniformly exercise desired states. That may be at a higher level of abstraction than code coverage alone, but coverage will still help you identify if and how often a certain state is reached.

Now let’s see how refining the fuzz grammar led us from a low-quality crash to a clean and highly exploitable PoC. The testcase triggering the first crash for CVE-2019-8605 only affected raw sockets, and was therefore root only. Here’s the reproducer I submitted to Apple:

As this C reproducer was modelled directly after the protobuf testcase, you can see how my early grammar had lots of precision for sockaddr structures. But setsockopt was horribly underspecified: it just took 2 integers and a random buffer of data. Fortunately, that was enough for us to guess 41 (IPPROTO_IPV6) and 50 (IPV6_3542RTHDR), correctly setting an IPv6 output option.

Looking at the ASAN report for the use after free, we see the following stack trace for the free:

Looking at the function that actually calls free, we see the following:

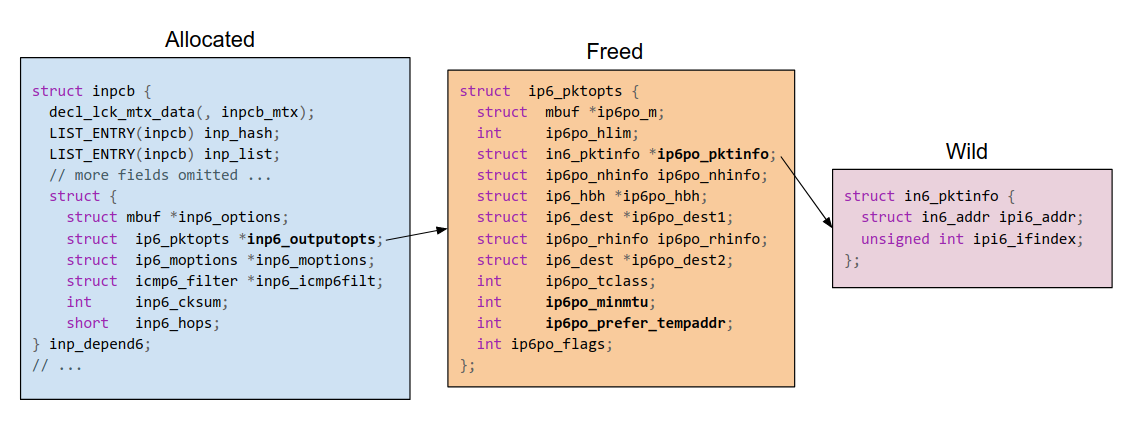

The call to ip6_freepcbopts is the culprit here. In my fuzzer build this function was inlined into in6_pcbdetach, which explains the backtrace we saw in the bug report. As you can see, the developers intended for the socket options to be reused in some cases by NULLing out each pointer after it was freed. But because the in6p_outputopts are represented by a pointer to another struct, they are freed by the helper function ip6_freepcbopts. That function does not know the address of inp, so it cannot clear inp->in6p_outputopts itself, and the caller in this snippet neglects to clear it afterwards. This bug does look straightforward upon inspection, but the ROUTE_RELEASE on the following line, for example, is safe because it’s modifying the in6p_route stored inline in the inp and correctly NULLing pointers. Older XNU revisions didn’t NULL anything, and either they were all buggy or this code just wasn’t originally designed to account for reuse of the socket.
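The pattern is easier to see in a toy model. The sketch below is not XNU code: `toy_inpcb`, `toy_pktopts`, and the one-slot allocator are hypothetical stand-ins, chosen so that the “freed” memory stays inspectable without undefined behavior. It only illustrates why a helper that receives the options pointer by value cannot clear the field in the owning struct.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical miniature of the socket options and the owning PCB. */
struct toy_pktopts { int minmtu; };
struct toy_inpcb { struct toy_pktopts *outputopts; };

/* One-slot "heap": freeing only flips a flag, so tests can look at it. */
static struct toy_pktopts toy_slot;
static bool toy_slot_live;

struct toy_pktopts *toy_alloc(void)
{
    assert(!toy_slot_live);
    toy_slot_live = true;
    return &toy_slot;
}

/* Like the helper in the text: it sees only the options pointer, so it
 * has no way to reset the field inside the caller's struct. */
void toy_freepcbopts(struct toy_pktopts *opts)
{
    if (opts != NULL)
        toy_slot_live = false;
}

void toy_setopt(struct toy_inpcb *inp, int minmtu)
{
    if (inp->outputopts == NULL)
        inp->outputopts = toy_alloc();
    inp->outputopts->minmtu = minmtu;
}

/* Buggy detach: frees the options but leaves the stale pointer behind. */
void toy_detach_buggy(struct toy_inpcb *inp)
{
    toy_freepcbopts(inp->outputopts);
}

/* Fixed detach: the caller clears its own field after the free. */
void toy_detach_fixed(struct toy_inpcb *inp)
{
    toy_freepcbopts(inp->outputopts);
    inp->outputopts = NULL;
}

bool toy_has_dangling_opts(const struct toy_inpcb *inp)
{
    return inp->outputopts != NULL && !toy_slot_live;
}
```

In the buggy variant a later get or set on the same socket would still follow `outputopts` into memory the allocator considers free, which is the state the reproducer reaches.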

The freed buffer was created by a call to setsockopt. This is a hint that we might be able to keep accessing the freed buffer with more calls to getsockopt and setsockopt, which would represent read and write primitives respectively. The initial testcase looked like a really specific edge case in raw sockets, so I figured it wasn’t easily exploitable. Whenever I report a bug, I will create a local patch for it to avoid hitting it again in subsequent fuzzing. But because I wanted to find more variants of it, I just disabled raw sockets in my fuzzer with a one line enum change and left the bug intact.

This would prove to be the right idea. I quickly found a new variant that let you read the use-after-free data using getsockopt via a TCP socket, so it worked inside the iOS app sandbox. Awesome! After some quick trial and error I saw that setsockopt wouldn’t work for sockets that have been disconnected. But letting the fuzzer continue to search for a workaround for me was free, so again I worked around the unexploitable testcase and left the bug intact by adding a workaround specifically for the getsockopt case:

By this point I realized that setsockopt was an important source of complexity and bugs. I updated the grammar to better model the syscall by confining the name argument for setsockopt to be only valid values selected from an enum. You can see the change below, where SocketOptName enum now specifies a variety of real option names from the SO, TCP, IPV6, and other levels.

These changes were the critical ones that led to the highly exploitable testcase. By allowing the fuzzer to explore the setsockopt space much more efficiently, it wasn’t long before it synthesized a testcase that wrote to the freed buffer. When I looked at the crashing input, I was stunned to see this in the decoded protobuf data:

What is that SO_NP_EXTENSIONS option? And why did inserting this syscall into the testcase turn it from a memory disclosure into an exploitable memory corruption? Quickly skimming through the SO_NP_EXTENSIONS handling in XNU I realized that we were hitting this:

I think every vulnerability researcher can relate to the moment when they realize they have a great bug. This was that moment for me; that comment described the exact scenario I needed to turn my use-after-free-read into a use-after-free-write. Transcribing the full testcase to C yields the following:

In effect, the fuzzer managed to guess the following syscall:

I was surprised to see this because I completely expected the use after free to be triggered in another way. What’s really cool about combining grammar based fuzzing with coverage feedback is that specifying the enums that represent the level and name options along with a raw buffer for the “val” field was enough to find this option and set it correctly. This meant the fuzzer guessed the length (8) of val and the data representing SONPX_SETOPTSHUT (low bit set for each little-endian dword). We can infer that the fuzzer tried the SO_NP_EXTENSIONS option many times before discovering that a length of 8 was notable in terms of additional coverage. Then this set_sock_opt message was propagated throughout the corpus as it was mixed with other relevant testcases, including the one that triggered my original bug. Then ensuring two bits in val were set was just a 1 in 4 guess. The same setsockopt call that set up the buggy state was called again to trigger the use after free, which was another shallow mutation made by the protobuf-mutator, just cloning one member of the syscall sequence. Writing effective fuzzers involves a lot of thinking about probability, and you can see how by giving the fuzzer manually-defined structure in just the right places, it managed to explore at an abstraction level that found a great PoC for this bug.
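The “1 in 4” figure can be checked with a small model. The sketch below assumes that the 8-byte value is two little-endian 32-bit words (flags, then mask), that SONPX_SETOPTSHUT is the low bit, and that the option only takes effect when the bit is set in both words. Those are my reading of the description above, and the `model_` names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define MODEL_SONPX_SETOPTSHUT 0x1u /* assumed: the low bit of each word */

/* Assumed layout of the 8-byte option value: flags word, then mask word. */
struct model_np_extensions {
    uint32_t npx_flags;
    uint32_t npx_mask;
};

/* Decode a little-endian 32-bit word regardless of host byte order. */
static uint32_t load_le32(const uint8_t *p)
{
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

/* True when the raw buffer would switch the behaviour on in this model. */
bool enables_setoptshut(const uint8_t val[8])
{
    struct model_np_extensions ext;
    ext.npx_flags = load_le32(val);
    ext.npx_mask = load_le32(val + 4);
    return (ext.npx_mask & MODEL_SONPX_SETOPTSHUT) != 0 &&
           (ext.npx_flags & MODEL_SONPX_SETOPTSHUT) != 0;
}

/* Try all four combinations of the two low bits and count the winners. */
int count_enabling_low_bit_choices(void)
{
    int hits = 0;
    for (unsigned flags_bit = 0; flags_bit <= 1; flags_bit++) {
        for (unsigned mask_bit = 0; mask_bit <= 1; mask_bit++) {
            uint8_t val[8] = {0};
            val[0] = (uint8_t)flags_bit; /* low byte of the flags word */
            val[4] = (uint8_t)mask_bit;  /* low byte of the mask word */
            if (enables_setoptshut(val))
                hits++;
        }
    }
    return hits;
}
```

Only one of the four combinations enables the option, so once the fuzzer has settled on the right option name and an 8-byte length, a random buffer has a reasonable chance of hitting it.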

I hope you enjoyed this insight into the bug hunting process. For more background about getting started with this fuzzing approach, take a look at syzkaller and this tutorial.

Exploitation

How use after free works

The exploit I’m about to describe uses a single use after free bug to get a safe and reliable arbitrary read, defeat ASLR, do an arbitrary free, and ultimately allow us to build an arbitrary read/write mechanism. That’s a lot of responsibility for one bug, so it’s worth giving a little background into how use-after-frees work for readers who have never exploited one before. I vividly remember when I read a post by Chris Evans right here on this blog, called “What is a ‘good’ memory corruption vulnerability?” When I downloaded and ran Chris’s canonical use after free demonstration, and the “exploit” worked the first try on my laptop, I was instantly struck by the simplicity of it. Since then, I’ve written several real world use after free exploits, and they all stem from the same insight: it’s much easier than you would think to reclaim a freed buffer with controlled data. As long as a buffer is not allocated from a specialized pool (PartitionAlloc, a slab heap, etc.), objects of approximately the same size, i.e., in the same size class, will be mixed between different callers of malloc and free. If you can cause arbitrary allocations that are the same size from your freed object’s size class you can be pretty sure that you will reclaim the freed data quickly. This is by design: if memory allocators did not behave this way, applications would lose performance by not reusing cache lines from recently freed allocations. And if you can tell whether or not you succeeded in reclaiming your freed buffer, exploitation is almost deterministic. So then, what makes a good UaF bug? If you can control when you free, when you use the freed allocation, and can safely check whether you’ve reclaimed it (or can massage the heap to make reclaiming deterministic), exploitation will be straightforward. This is at least how a CTF teammate explained it to me, and it still holds today against real targets. 
The bug we are looking at in this post is one of those bugs, and for that reason, it’s about as “nice” as memory corruption gets.
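To make the reclaim idea concrete, here is a toy size-class allocator with a last-in, first-out free list. It is a deliberately simplified model, not how the XNU zone allocator or any real malloc is implemented; the `pool_` names and the slot size are illustrative assumptions. Because the backing storage is a static array, reading through the stale pointer is well-defined in this sketch.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define POOL_SLOT_SIZE 192
#define POOL_SLOT_COUNT 8

static unsigned char pool_slots[POOL_SLOT_COUNT][POOL_SLOT_SIZE];
static int pool_free_stack[POOL_SLOT_COUNT];
static int pool_free_top = -1; /* -1 means "not initialised yet" */

static void pool_init(void)
{
    pool_free_top = 0;
    for (int i = POOL_SLOT_COUNT - 1; i >= 0; i--)
        pool_free_stack[pool_free_top++] = i;
}

/* Pop the most recently freed slot: the LIFO reuse that sprays rely on. */
void *pool_alloc(void)
{
    if (pool_free_top < 0)
        pool_init();
    if (pool_free_top == 0)
        return NULL;
    return pool_slots[pool_free_stack[--pool_free_top]];
}

void pool_free(void *p)
{
    ptrdiff_t idx = (unsigned char (*)[POOL_SLOT_SIZE])p - pool_slots;
    assert(idx >= 0 && idx < POOL_SLOT_COUNT);
    assert(pool_free_top >= 0 && pool_free_top < POOL_SLOT_COUNT);
    pool_free_stack[pool_free_top++] = (int)idx;
}

/* Returns 1 when the "attacker" allocation lands on the freed victim slot
 * and the stale victim pointer observes the attacker's bytes. */
int pool_reclaim_demo(void)
{
    unsigned char *victim = pool_alloc();
    assert(victim != NULL);
    memset(victim, 0, POOL_SLOT_SIZE);
    pool_free(victim);                   /* victim is now a stale pointer */

    unsigned char *spray = pool_alloc(); /* same size class */
    assert(spray != NULL);
    memset(spray, 0x41, POOL_SLOT_SIZE);

    return spray == victim && victim[0] == 0x41;
}
```

Real allocators are less predictable than this, which is why being able to check whether the reclaim succeeded matters so much.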

Bootstrapping better primitives

Generally the end goal of binary exploitation is to get arbitrary code execution, sometimes referred to as “shellcode” when that arbitrary code spawns a shell for you on the target system. For iOS, the situation is slightly more complicated with the addition of PAC, which introduces a security boundary between kernel memory R/W and kernel code execution. This means our bug will serve as an entrypoint to get kernel memory R/W using a data-based attack, with code execution left to another layer of exploitation.

To start the exploit, I thought it would be interesting to see what primitives I could build without knowing Mach specifics. Mach and BSD are the Yin and Yang of XNU, representing a dual view of many fundamental kernel objects. For example, a process is represented twice in the kernel: once as a Mach task and once as a BSD proc. My bug occurs in the BSD half, and most exploits end up getting control of a highly privileged Mach port. This means we’ll need to figure out how to manipulate Mach data structures starting from our corruption on the BSD side. At this point I was still only familiar with the BSD part of the kernel, so I started my research there.

Here’s the inpcb containing the dangling in6p_outputopts pointer:

Looking at the getters and setters for these options via [get/set]sockopt, we quickly see that fetching the integers for the minmtu and the prefer_tempaddr fields is straightforward and will let us read data directly out of the freed buffer. We can also freely read 20 bytes from the ip6po_pktinfo pointer if we manage to reclaim it. Take a look at this snippet from the ip6_getpcbopt implementation yourself:

ip6po_minmtu and ip6po_prefer_tempaddr are adjacent to each other and qword-aligned, so if we manage to reclaim this freed struct with some other object containing a pointer, we will be able to read out the pointer and defeat ASLR. We can also take advantage of these fields by using them as an oracle for heap spray success. We spray objects containing an arbitrary pointer value we choose at a location that overlaps the ip6po_pktinfo field and a magic value in the minmtu field. This way we can repeatedly read out the minmtu field, so if we see our magic value we know it is safe to dereference the pointer in ip6po_pktinfo. It is generally safe to read the in6p_outputopts because we know it is already mapped, but not ip6po_pktinfo as it could have been reclaimed by some other garbage that points to unmapped or unreadable memory. Before we talk about which object we spray to leak a pointer and how to spray arbitrary data, let’s quickly figure out what primitive we can build from the setsockopt corruption.
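The oracle logic can be sketched in isolation. In this model the `oracle_pktopts` struct plays the freed buffer, reading its `minmtu` stands in for the getsockopt call, and the spray is a callback. All names, the field layout, and the magic constant are assumptions for illustration and do not match the real struct or the exploit’s code.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define ORACLE_MAGIC 0x4d544d31 /* arbitrary marker chosen for this sketch */

/* Simplified view of the freed buffer; the layout is illustrative only. */
struct oracle_pktopts {
    uint64_t pktinfo_ptr;    /* pointer we want the kernel to follow */
    int32_t minmtu;          /* integer we can always read back safely */
    int32_t prefer_tempaddr;
};

typedef void (*oracle_spray_fn)(struct oracle_pktopts *slot,
                                uint64_t target, unsigned attempt);

/* Spray, then consult the oracle. Only when the magic value shows up is
 * it considered safe to let the kernel dereference pktinfo_ptr. */
bool oracle_spray_until_reclaimed(struct oracle_pktopts *dangling,
                                  uint64_t target, oracle_spray_fn spray,
                                  unsigned max_attempts)
{
    assert(dangling != NULL && spray != NULL);
    for (unsigned attempt = 0; attempt < max_attempts; attempt++) {
        spray(dangling, target, attempt);
        if (dangling->minmtu == ORACLE_MAGIC)
            return true;  /* our data is in place */
    }
    return false;         /* never confirmed: do not touch the pointer */
}

/* Example spray for the model: two misses that leave unrelated garbage
 * in the slot, then a hit on the third attempt. */
void oracle_lucky_third_spray(struct oracle_pktopts *slot, uint64_t target,
                              unsigned attempt)
{
    if (attempt < 2) {
        slot->pktinfo_ptr = 0xdeadbeefu;
        slot->minmtu = (int32_t)attempt;
        return;
    }
    slot->pktinfo_ptr = target;
    slot->minmtu = ORACLE_MAGIC;
}
```

The key design point is that the check itself only reads memory that is known to be mapped, so a failed spray costs a retry rather than a panic.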

Unfortunately, the setsockopt path, unlike the getsockopt path, is not as easy to use as it first appears. Most of the relevant options are root only or are highly constrained. This still leaves IPV6_2292PKTINFO/IPV6_PKTINFO as the best option, but in testing and reading the code it appeared impossible to write anything but highly constrained values there. The ipi6_addr field, which looks perfect for writing arbitrary data, must be set to 0 to pass a check that it is unspecified. And the interface index has to be valid, which constrains us to low values. If the interface is 0, it frees the options. This means we can only write 16 null bytes plus a small non-zero 4 byte integer anywhere in memory. That’s certainly enough for exploitation, but what about the free case? As long as you pass in a pktinfo struct containing 20 null bytes, ip6_setpktopt will call ip6_clearpktopts for you, which finally calls FREE(pktopt->ip6po_pktinfo, M_IP6OPT). Remember, ip6po_pktinfo is our controlled pointer, so this means we have an arbitrary free. Even better, it’s a bare free, meaning we can free any object without knowing its zone. That’s because FREE is a wrapper for kfree_addr, which looks up the zone on your behalf. To keep late stage exploitation generic, I opted for the arbitrary free primitive over the constrained write primitive.
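The constraints above amount to a small decision table, which the following sketch encodes. It is a paraphrase of the behaviour described in this section and not the kernel’s code: the struct mirrors the 20-byte pktinfo layout (16-byte address plus 4-byte interface index), and the function name, enum, and `highest_valid_ifindex` parameter are hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* 16-byte IPv6 address followed by a 4-byte interface index: 20 bytes. */
struct model_in6_pktinfo {
    uint8_t ipi6_addr[16];
    uint32_t ipi6_ifindex;
};

enum pktinfo_outcome {
    PKTINFO_REJECTED,          /* fails validation, nothing happens */
    PKTINFO_FREES_OPTION,      /* all zero: the stored pktinfo is freed */
    PKTINFO_CONSTRAINED_WRITE  /* 16 zero bytes plus a small index written */
};

enum pktinfo_outcome classify_pktinfo(const struct model_in6_pktinfo *info,
                                      uint32_t highest_valid_ifindex)
{
    static const uint8_t unspecified[16] = {0};

    assert(info != NULL);

    /* The address must be the unspecified (all-zero) address. */
    if (memcmp(info->ipi6_addr, unspecified, sizeof(unspecified)) != 0)
        return PKTINFO_REJECTED;

    /* Twenty null bytes in total: the option is cleared and freed. */
    if (info->ipi6_ifindex == 0)
        return PKTINFO_FREES_OPTION;

    /* Otherwise the index has to name a real interface (a low value). */
    if (info->ipi6_ifindex > highest_valid_ifindex)
        return PKTINFO_REJECTED;

    return PKTINFO_CONSTRAINED_WRITE;
}
```

Seen this way, the all-zero input is the only one whose effect does not depend on the device’s interface list, which is one more reason the free is the more portable primitive.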

Implementing and testing the heap spray

Now that we have an attack plan, it’s just a matter of figuring out a way to spray the heap with controlled data. Fortunately for us, there is already a well-known way to do this via IOSurface, and even better, Brandon Azad (@_bazad) already had some code to do it! After some debugging and integration into my exploit, I had a working “stage 1” abstraction that could read and free an arbitrary address, by reclaiming and checking the minmtu magic value as described above. This IOSurface technique was used as early as 2016 as part of an in-the-wild exploit chain for iOS 10.0.1-10.1.1.

When testing on different iOS devices and versions, I found that spray behavior was different. What was fast and reliable on one device was unreliable on another. Fortunately, improvements for one device generally benefited all platforms and versions, so I didn’t need to worry about maintaining multiple spray patterns per-platform. Some of the parameters involved here are the number of objects to spray per attempt, how many times to retry, and the order in which to make allocations of both the sprayed and (use-after-)freed socket options. Understanding heap allocator internals across versions and devices would be ideal, but I found experimentation was sufficient for my purposes. This is a CTF insight; I used to solve Linux heap problems by reading glibc and carefully planning out an exploit on paper. A couple years later, the popular approach had shifted (at least for me) to using tools to inspect the state of the heap, and iterating quickly to check how high level modifications to the exploit would change the heap layout. Of course, I didn’t have such tooling on iOS. But by checking the minmtu value, I did have a safe oracle to test spray performance and reliability, so it was quick to iterate by hand. When iOS 12.4 regressed and reintroduced this vulnerability, I tested the exploit against an iPhone XR and found that the spray failed often. But after changing the order in which I did sprays (creating a new dangling pointer after each spray attempt, instead of all at once in the beginning), success became quick and reliable again. I have no doubt that keeping a good understanding of the internals is superior, but treating this like an experimental black box is pretty fun.

What makes the SockPuppet exploit fast? Other exploits often rely on garbage collection in order to get their freed object reallocated across a zone. Because all of the objects I used were in the same generic size-based zone, I needed fewer allocations to succeed, and I didn’t have to trigger and wait for garbage collection.

Learning about tfp0

At this point, I have stretched the initial bug to its limits, and that has given me an arbitrary read and an arbitrary free. With this we can now create a new use after free where there was never a bug in the original code. I took a look around for any cute shallow tricks that others might have overlooked in the BSD part of the kernel tree before accepting that the Mach exploitation path offers some nice facilities for kernel exploitation, and so it was time to learn it.

If you follow iOS kernel exploitation even casually, you’ve probably heard of “tfp0.” So what is it exactly? It’s short for task_for_pid(0). task_for_pid returns to you a Mach port with a send right to the task with the given pid, so when you call it with pid 0, this gives you the kernel task port. A port is one of the fundamental primitives of Mach. It’s like a file descriptor that is used to represent message queues. Every such message queue in the kernel has one receiver, and potentially multiple senders. Given a port name, such as the one returned by task_for_pid, you can send or receive a Mach message to that queue, depending on what rights you have to access it. The kernel_task is like any other task in Mach in that it exposes a task port.

What’s so great about getting access to the kernel task port? Just take a look at osfmk/mach/mach_vm.defs in the XNU sources. It has calls like mach_vm_allocate, mach_vm_deallocate, mach_vm_protect, and mach_vm_read_overwrite. If we have a send right to a task port, we can read, write, and allocate memory in that process. XNU supports this abstraction for the kernel_task, which means you can use this clean API to manipulate memory in the kernel’s address space. I couldn’t help but feel that every iPhone has this “cheat” menu inside of it, and you have to pass a serious test of your skills to unlock it. You can see why this is so appealing for exploitation, and why I was so excited to try to get ahold of it! Of course, we can’t just call task_for_pid(0) from our unprivileged sandboxed app. But if we can implement this function call in terms of our memory corruption primitives, we’ll be able to pretend we did!

To understand what we need to do to simulate a legitimate tfp0 call, let’s look at how a message we send from our task to another task (perhaps kernel_task) actually looks, starting from the port name (file descriptor equivalent) in userland all the way to message delivery.

Let’s start by taking a look at the struct representing a message header:

I’ve labeled the important fields above. msgh_remote_port contains the destination port name, which will be the kernel task port name if we have access to it. The msgh_bits specify a number of flags, one of them being the “disposition” of the message we’re sending for the different port names. If we have the send right to the kernel task port, for example, we’ll set msgh_bits to tell the kernel to copy the send right we have in our IPC space to the message. If this sounds tricky, don’t worry. The main thing to keep in mind is that we name the destination of the message in the header, and we also mark how we want to use the capability we have for it stored in our IPC namespace (mach file descriptor table).
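As a worked example of how the dispositions are packed into msgh_bits, the sketch below re-creates the encoding with locally defined constants. The numeric values mirror my understanding of mach/message.h (remote disposition in the low 5 bits, local disposition in bits 8 to 12, copy-send as 19, make-send-once as 21); they are redefined with a `MODEL_` prefix so the example compiles without the Mach headers and should be treated as assumptions.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed to match the values in mach/message.h. */
#define MODEL_MSG_TYPE_COPY_SEND      19u
#define MODEL_MSG_TYPE_MAKE_SEND_ONCE 21u
#define MODEL_MSGH_BITS_REMOTE_MASK   0x0000001fu
#define MODEL_MSGH_BITS_LOCAL_MASK    0x00001f00u

/* Pack the destination (remote) and reply (local) dispositions. */
uint32_t model_msgh_bits(uint32_t remote, uint32_t local)
{
    assert((remote & ~0x1fu) == 0 && (local & ~0x1fu) == 0);
    return remote | (local << 8);
}

/* What the kernel will do with our right to the destination port. */
uint32_t model_remote_disposition(uint32_t bits)
{
    return bits & MODEL_MSGH_BITS_REMOTE_MASK;
}

/* What the kernel will do with the reply port named in the header. */
uint32_t model_local_disposition(uint32_t bits)
{
    return (bits & MODEL_MSGH_BITS_LOCAL_MASK) >> 8;
}
```

A typical request that copies an existing send right to the destination and creates a send-once right for the reply port would therefore carry 0x1513 in msgh_bits under these assumptions.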

When we want to send a message from userland, we do a mach trap, the mach equivalent of a syscall, called mach_msg_overwrite_trap. Let’s look at the MACH_SEND_MSG case and follow along, so we find out what we’ll need to arrange in kernel memory for tfp0:

If we want to deliver a message to the kernel task port, we just need to understand how ipc_kmsg_get, ipc_kmsg_copyin, and ipc_kmsg_send work. ipc_kmsg_get simply copies the message from the calling task’s address space into kernel memory. ipc_kmsg_copyin actually does interesting work. Let’s see how it ingests the message header through a call to ipc_kmsg_copyin_header.

ipc_kmsg_copyin_header serves to convert the remote port name into the port object, updating the msg->msgh_remote_port to point to the actual object instead of storing the task-specific name. This is the BSD/Linux equivalent of converting a file descriptor into the actual kernel structure that it refers to. The message header has several name fields, but I’ve simplified the code to highlight the destination case, since we’ll want the kernel_task port to be our destination port. The ipc_space_t space argument represents the IPC space for the current running task, which is the Mach equivalent of the file descriptor table. First, we lookup the dest_name in the IPC space to get the ipc_entry_t representing it. Every ipc_entry_t has a field called ie_bits which contains the permissions our task has to interact with the port in question. Here’s what the IPC entry struct looks like:

Remember that the header of the message we sent has a “disposition” for the destination which describes what we want our message to do with the capability we have for the remote port name. Here’s where that actually gets validated and consumed:

Here, I’ve reproduced the code for the MACH_MSG_TYPE_COPY_SEND case. You can see where ie_bits from the IPC entry is used to check the permission we have. If we want to take advantage of the send right in this message, we can copy the right to the message, and this code checks that we have the right in ie_bits before updating the relevant reference counts and finally giving us access to the port object to which we can enqueue messages. If we don’t have the proper permissions according to entry->ie_bits, the attempt to send the message will fail.
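The permission check described here can be modelled in a few lines. This is a simplified sketch and not the XNU implementation: the struct fields are reduced to what the paragraph mentions, the type-bit values (send as 0x10000, receive as 0x20000) and the error code are assumptions based on my reading of the Mach headers, and locking is omitted entirely.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define COPYIN_PORT_TYPE_SEND    0x00010000u /* assumed bit for a send right */
#define COPYIN_PORT_TYPE_RECEIVE 0x00020000u /* assumed bit for a receive right */

enum copyin_result {
    COPYIN_KERN_SUCCESS = 0,
    COPYIN_KERN_INVALID_RIGHT = 17 /* assumed numeric value */
};

struct copyin_port {
    uint32_t ip_srights;    /* number of send rights in existence */
    uint32_t ip_references; /* object reference count */
};

struct copyin_entry {
    struct copyin_port *ie_object;
    uint32_t ie_bits;       /* rights this task holds, plus user refs */
};

/* Model of the copy-send case: verify the right, bump the counts, and
 * hand back the port object the message will now point at. */
enum copyin_result copyin_copy_send(struct copyin_entry *entry,
                                    struct copyin_port **out_port)
{
    assert(entry != NULL && out_port != NULL);

    if ((entry->ie_bits & COPYIN_PORT_TYPE_SEND) == 0)
        return COPYIN_KERN_INVALID_RIGHT; /* no send right in our space */

    struct copyin_port *port = entry->ie_object;
    port->ip_srights++;     /* the message now carries a send right */
    port->ip_references++;  /* and keeps the port alive */
    *out_port = port;
    return COPYIN_KERN_SUCCESS;
}
```

Note that the decision depends only on the entry’s ie_bits, which is why an attacker who can forge an entry controls the outcome.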

Now that our message is copied in, validated, and updated to contain real kernel object pointers, ipc_kmsg_send goes ahead and just adds our message to the destination queue:

As you can see above, if the destination port’s ip_receiver is the kernel IPC space, ipc_kobject_server is called as a special case to handle the kernel message. The kernel task port has the kernel IPC space as its ip_receiver, so we’ll make sure to replicate that when we are arranging for tfp0.

Whew, that was a lot! Now that we see the essentials behind message sending, we are ready to envision our goal state, i.e., how we want kernel memory to look as if we had called tfp0 successfully. We’ll want to add an IPC entry to our IPC space, with ie_object pointing to the kernel task port, and ie_bits indicating that we have a send right. Here’s how this looks:

The green nodes above represent all the data structures that are part of our current task which is running the exploit. The blue node is a fake IPC port that we’ll set up to point to the kernel task and the kernel task’s IPC table. Remember the ie_bits field specifies the permissions we have to interact with the ie_object, so we’ll want to make sure we have a send right to it specified there.
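Expressed as data, the goal state is just two small structures wired together. The sketch below models it with hypothetical `goal_` types that keep only the fields named in the text (ie_object, ie_bits, ip_receiver, and a kernel object pointer); the real structures are much larger, and the send-right bit value is an assumption.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define GOAL_PORT_TYPE_SEND 0x00010000u /* assumed bit for a send right */

struct goal_space { int placeholder; };
struct goal_task { const void *map; };

struct goal_port {
    const struct goal_space *ip_receiver; /* who receives on this port */
    struct goal_task *ip_kobject;         /* kernel object behind the port */
};

struct goal_entry {
    struct goal_port *ie_object;
    uint32_t ie_bits;
};

/* Wire a fake port to the kernel's IPC space and a task object, then
 * make an IPC entry claim a send right to it. */
void goal_install(struct goal_entry *entry, struct goal_port *fake_port,
                  const struct goal_space *kernel_space,
                  struct goal_task *fake_task)
{
    assert(entry != NULL && fake_port != NULL);
    fake_port->ip_receiver = kernel_space;
    fake_port->ip_kobject = fake_task;
    entry->ie_object = fake_port;
    entry->ie_bits = GOAL_PORT_TYPE_SEND | 1u; /* send right, one user ref */
}

/* A message is treated as a kernel request in this model only if we hold
 * a send right and the port is received by the kernel's IPC space. */
bool goal_reaches_kernel(const struct goal_entry *entry,
                         const struct goal_space *kernel_space)
{
    return (entry->ie_bits & GOAL_PORT_TYPE_SEND) != 0 &&
           entry->ie_object != NULL &&
           entry->ie_object->ip_receiver == kernel_space;
}
```

Everything that follows in the exploit is about learning the addresses needed to fill in these fields and finding a way to get the entry into our IPC space.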

Defeating ASLR and faking data structures

IPC systems generally need a way to serialize file descriptors and send them over a pipe, and the kernel needs to understand this convention to do the proper accounting. Mach is no exception. Mach ports, like file descriptors, can be sent by one process to another with send rights attached. You can send a single port from one process to another using a special message that contains a mach_msg_port_descriptor_t. If you’d like to send multiple ports in a single message, you can send mach_msg_ool_ports_descriptor_t, an array of ports stored out of line (OOL), meaning outside of the message header itself. We, like many others, will be using the OOL ports descriptor in our exploit.

What makes the OOL ports array so useful is that you completely control the size of the array. When you pass in an array of mach port names, the kernel will allocate space for an arbitrary number of pointers, each of which is filled with a pointer to the ipc_port structure that we want to send. In case you didn’t notice, we can use this trick as an ASLR bypass as we can overlap an OOL descriptor array of port pointers with the freed buffer of size 192, and simply read the two adjacent int fields from the freed struct via getsockopt. At this point we can start to traverse the kernel data structures with our arbitrary read.
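The arithmetic behind this leak is simple enough to write down. The helpers below are illustrative and their names are hypothetical: one computes how many 8-byte port pointers fill an allocation of a given size, one rebuilds a 64-bit pointer from two adjacent 32-bit reads on a little-endian target, and one applies a rough sanity check. The check (top 16 bits all set) is only a heuristic I am assuming for the sketch.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* How many 64-bit port pointers exactly fill an allocation of this size. */
size_t ool_port_slots_for_size(size_t alloc_size)
{
    assert(alloc_size % sizeof(uint64_t) == 0);
    return alloc_size / sizeof(uint64_t);
}

/* Rebuild a pointer from two adjacent 32-bit fields. On a little-endian
 * target the field at the lower address supplies the low word. */
uint64_t pointer_from_adjacent_ints(uint32_t low_word, uint32_t high_word)
{
    return ((uint64_t)high_word << 32) | low_word;
}

/* Heuristic only: kernel addresses are assumed to have the top bits set. */
bool looks_like_kernel_pointer(uint64_t value)
{
    return (value >> 48) == 0xffffu;
}
```

For the 192-byte buffer this works out to 24 port names per OOL array, and the sanity check offers a cheap way to tell a leaked port pointer apart from stale socket option data before using it.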

Many exploits turn a corruption bug into a read primitive. We have the rare privilege of having a reliable read primitive before we do any corruption, so we use that combined with this pointer disclosure to leak all the relevant pointers to complete the exploit, including setting up crafted data at a known address. We go ahead and do all the necessary traversal now as you can see below.

The green nodes above represent the seed values for our exploration, and the orange nodes represent the values we’re trying to find. By spraying a message with an OOL port descriptor array containing pointers to ipc_port structs representing our host port, we find its ipc_port which will give us ipc_space_kernel via the receiver field.

We repeat the same initial trick to find the ipc_port for our own task. From there we find our task’s file descriptor table and use this to find a vtable for socket options and a pipe buffer. The vtable will give us pointers into the kernelcache binary. Because the kernel process’s BSD representation kernproc is allocated globally in bsd/kern/bsd_init.c, we can use a known offset from the socketops table to find it and lookup the address of kernel_task.

The pipe buffer is created by a call to the pipe() syscall, and it allocates a buffer that we can write to and read from via a file descriptor. This is a well known trick for getting known data at a known address. In order to make the fake ipc_port that we’ll inject into our IPC space, we create a pipe and send data to it. The pipe stores queued data into a buffer on the kernel heap, allocated via the generic size-based zones. We can read and write to that buffer repeatedly from userspace by reading and writing to the relevant pipe file descriptors, and that data is stored in kernel memory. By knowing the address of the buffer for our pipe, we can store controlled data there and create pointers to it. We’ll need that to make a crafted ipc_port for the kernel task.
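The userspace half of this trick needs nothing beyond standard POSIX calls. The sketch below writes a payload into a fresh pipe and reads it back; between the two calls the bytes live in a kernel-owned buffer. It says nothing about where that buffer is allocated, which is the part the exploit learns through its read primitive. The function name and the 512-byte limit are choices made for this example.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>
#include <sys/types.h>
#include <unistd.h>

/* Writes `len` bytes into a new pipe and reads them back into `out`.
 * Returns 0 on success and -1 on any failure. */
int pipe_round_trip(const void *payload, size_t len, void *out)
{
    int fds[2];
    int result = -1;

    /* Keep the payload small so the write cannot block on a full pipe. */
    assert(payload != NULL && out != NULL && len > 0 && len <= 512);

    if (pipe(fds) != 0)
        return -1;

    /* After this write the payload sits in kernel memory until read. */
    if (write(fds[1], payload, len) == (ssize_t)len &&
        read(fds[0], out, len) == (ssize_t)len)
        result = 0;

    close(fds[0]);
    close(fds[1]);
    return result;
}
```

Because reading drains the buffer and writing refills it, the same kernel allocation can be rewritten as often as needed from userspace.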

So we can now create our fake ipc_port and point it to the kernel_task and the ipc_space_kernel, right? I should point out now that even if we could call task_for_pid(0) and obtain a kernel_task port, we wouldn’t be able to send messages to it. Any userland task that tries to send a message to the kernel_task will be blocked from doing so when the kernel turns an ipc_port for a task into the task struct. This is implemented in task_conversion_eval:

I use the trick that many others have used, and simply create a copy of the kernel_task object so the pointer comparison they use won’t detect that I’m sending a message to the fake kernel_task object. It doesn’t matter that it’s not the real kernel_task because it’s simple to support the mach_vm_* functions with a fake kernel_task; we simply need to copy the kernel’s kernel_map and initialize a few other fields. You can see in the diagram above that we can simply pull that from the kernel_task, whose address we already know. We’ll store the fake kernel task adjacent to our fake ipc_port in the pipe buffer. For an example of this approach being used in the wild, see this exploit writeup from Ian Beer on the team.
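Why a copy gets past the check is easiest to see in a model. The sketch below paraphrases the check as a comparison of task addresses, which is my reading of the description above and not the kernel source; the `conv_` types, the two-field task struct, and the function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Drastically reduced task: only the map matters for memory operations. */
struct conv_task {
    const void *map;
    unsigned flags;
};

/* Stand-in for the one true kernel task object. */
static struct conv_task conv_kernel_task;

/* Model of the gate: the kernel may target anything, a task may target
 * itself, and nobody else may target the kernel task. The kernel task
 * is recognised purely by its address. */
bool conv_allowed(const struct conv_task *caller,
                  const struct conv_task *victim)
{
    if (caller == &conv_kernel_task)
        return true;
    if (caller == victim)
        return true;
    if (victim == NULL || victim == &conv_kernel_task)
        return false;
    return true;
}

/* Build a look-alike at a different address with the same contents. */
void conv_make_fake(struct conv_task *fake)
{
    assert(fake != NULL && fake != &conv_kernel_task);
    memcpy(fake, &conv_kernel_task, sizeof(*fake));
}
```

The copy shares the kernel’s map, so memory operations against it act on kernel memory, while its different address means an identity comparison no longer matches.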

Injecting our kernel_task port

We’re now going to use the OOL port descriptor array for another purpose. We send a message to ourselves containing an OOL array containing copies of our task port name, which we have the send right to. The send right validation happens initially when the message is sent, so if we edit the array while it’s waiting to be delivered, we can overwrite one of the ipc_ports to point to our fake kernel_task ipc_port. This trick is adapted from Stefan Esser’s excellent presentation on the subject, and has been used in several exploits. Note that an ipc_port has no notion itself of a send or receive right; those rights are tracked as part of the ipc_entry and are handled outside of the ipc_port. This makes sense, because a port encapsulates a given message queue. The rights to send or receive to that queue are specific to each process, so we can see why that information is stored in each process’s table independently.

Even though this trick of overwriting a pointer in an OOL port descriptor array is a known exploit technique, it’s up to the exploit developer to figure out how to actually make this corruption happen. We have an arbitrary read and arbitrary free. OOL port descriptor arrays and pipe buffers are allocated out of the global zone. We can combine these facts! Earlier we noted down the address of our pipe buffer. So we just free the pipe buffer’s actual buffer address and spray OOL port descriptor arrays. We then read the pipe buffer looking for our task’s ipc_port, overwriting it with the pointer to our fake port. Then we deliver the message to ourselves and check whether we managed to inject the fake kernel task port.
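
The scan-and-overwrite step can be sketched in Python as follows. This only simulates the bytes we would see through the pipe after an OOL port array reclaims the freed buffer; the addresses, the array length, and the helper name replace_pointer are all hypothetical, and pointers are assumed to be 8 bytes and little-endian.

```python
import struct

PTR_SIZE = 8  # assumed 64-bit kernel pointers


def replace_pointer(buf, target, replacement):
    """Overwrite the first pointer-aligned slot equal to target.

    Returns the byte offset of the slot, or -1 if target was not found.
    """
    for off in range(0, len(buf) - PTR_SIZE + 1, PTR_SIZE):
        (value,) = struct.unpack_from("<Q", buf, off)
        if value == target:
            struct.pack_into("<Q", buf, off, replacement)
            return off
    return -1


# Hypothetical kernel addresses, for illustration only.
OUR_TASK_PORT = 0xFFFFFFE000123400  # ipc_port of our own task
FAKE_PORT = 0xFFFFFFE000ABC000      # fake ipc_port inside a pipe buffer

# Simulated view of the reclaimed buffer: an OOL array holding four
# copies of our task's ipc_port pointer.
pipe_view = bytearray(struct.pack("<4Q", *[OUR_TASK_PORT] * 4))

offset = replace_pointer(pipe_view, OUR_TASK_PORT, FAKE_PORT)
ports = struct.unpack("<4Q", pipe_view)
print(offset, [hex(p) for p in ports])
```

If the helper returns -1, the spray did not reclaim the freed buffer and the free-and-spray step has to be retried. After a successful overwrite, receiving the message hands back one port name that refers to the fake port while the rest still refer to our own task.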

At this point, we have tfp0. Like voucher_swap and other exploits, we want to use this temporary tfp0, which is backed by pipe buffer structures, to bootstrap a more stable tfp0. We do this by using the kernel task port to allocate a page of kernel memory dedicated to storing our data, and then using the write primitive to write our fake task port and kernel_task there. We then change our IPC space entry to point to this new ipc_port.

We still have a pipe structure with a dangling pointer to a freed buffer. We don’t want it to double-free when we close the fd, so we use our new stable tfp0 powers to null out that pointer. We essentially performed two actions to corrupt memory: we freed that pointer, and we used the new use-after-free pipe buffer to overwrite a single ipc_port pointer, so keeping track of cleanup is fairly straightforward.

Evaluating PAC and MTE

Because this exploit is based on a memory corruption bug, there’s a lingering question of how it is affected by different mitigations. With the A12 chip, Apple brought PAC (Pointer Authentication) to iOS, which appears to be designed to limit kernel code execution assuming arbitrary kernel read/write among other goals. This sounds like a pretty strong mitigation, and without any real experience I wasn’t sure how exploitation would fare. I was testing on an A9 chip, so I just hoped I wouldn’t do anything in my exploit that would turn out to be mitigated by PAC. This was the case. Because my exploit only targeted data structures and did not involve arbitrary code execution, there were no code pointers to forge.

iOS 13 is beginning to introduce protections for some data pointers, so it is worthwhile to examine which pointers I would need to forge for this exploit to have worked in the context of data PAC. PAC protects return addresses on the stack from corruption by signing them with a private key and the location of the pointer itself on the stack as a context value. However, other code pointers are signed without a context value. Similarly, the effectiveness of data PAC will likely depend on how Apple chooses to use context values.

Let’s consider the situation where all data pointers are protected but not signed with a context based on location. In this scenario we can copy them from one location to another so long as we manage to leak them. This is better known as a “pointer substitution attack,” and has been described by Brandon in his blog post about PAC.
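
A toy Python model shows why the context value matters. It is a sketch under stated assumptions, not how PAC hardware works: an HMAC stands in for the PAC computation, the signature is kept next to the pointer rather than packed into its upper bits, and the key, addresses, and function names are all hypothetical.

```python
import hashlib
import hmac

KEY = b"toy-per-boot-key"  # stands in for the hardware key, never leaked


def _mac(addr, context):
    msg = addr.to_bytes(8, "little") + context.to_bytes(8, "little")
    return hmac.new(KEY, msg, hashlib.sha256).digest()[:8]


def sign(addr, context=0):
    """Return a (pointer, signature) pair bound to the given context."""
    return (addr, _mac(addr, context))


def authenticate(signed, context=0):
    """Return the raw pointer if the signature matches, else None."""
    addr, tag = signed
    if hmac.compare_digest(tag, _mac(addr, context)):
        return addr
    return None


# Hypothetical addresses, for illustration only.
KERNEL_MAP = 0xFFFFFFE000500000
SLOT_IN_KERNEL_TASK = 0xFFFFFFE000100020  # where the real pointer is stored
SLOT_IN_FAKE_TASK = 0xFFFFFFE000ABC020    # where we want to place a copy

# Context 0: a leaked signed pointer authenticates wherever it is stored.
leaked = sign(KERNEL_MAP)
substituted = authenticate(leaked)

# Location as context: the same leak fails once moved to another slot.
leaked_bound = sign(KERNEL_MAP, SLOT_IN_KERNEL_TASK)
at_home = authenticate(leaked_bound, SLOT_IN_KERNEL_TASK)
moved = authenticate(leaked_bound, SLOT_IN_FAKE_TASK)

print(substituted == KERNEL_MAP, at_home == KERNEL_MAP, moved)
```

In the first case the attacker never needs the key; leaking one valid signed pointer is enough to reuse it elsewhere. In the second case the copy is rejected because the storage address is part of what was signed.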

Our read primitive remains effective in the context of data PAC since our dangling pointer is still signed. There are several attacker-sourced pointers we ultimately need to either forge or substitute: ipc_space_kernel, kernel_map, &fake_port, and &fake_task, along with all the intermediate reads needed to find them. Recall that the &fake_port and &fake_task are pointers to pipe buffers. For our initial entrypoint, it doesn’t matter if the pktinfo pointer is protected, because we have to leak a real ipc_port pointer anyway via the OOL ports spray. This means we can collect a signed ipc_port, and do all of the up front data structure traversals we do already, copying the PAC data pointers without a problem. ipc_space_kernel and kernel_map are already signed, and if pipe buffers are signed we can simply split the fake port and task across two pipe buffers and obtain a signed pointer to each buffer. In any case, the exploit would not work completely out of the box, because we do forge a pointer into the file descriptor table to look up arbitrary fd structures and some lookups may require reading more than 20 bytes of data. However, I’m confident that the read primitive is powerful enough to work around these gaps without significant effort.

In practice iOS 13 only protects some data pointers, which paradoxically might improve end user security. For example, if pipe buffers are unprotected, simply leaking the address of one is unlikely to let us use that pointer to represent a fake ipc_port if pointers to ports are signed. An examination of the kernel cache for 17B5068e revealed that IPC port pointers are indeed not protected, but I do think Apple plans to protect them (or already does so in non-beta builds), according to Apple’s Black Hat talk earlier this year. Like any mitigation combined with a bug providing strong initial primitives, it’s just a matter of designing alternative exploit techniques. Without considering the whack-a-mole of which pointers should be protected or unprotected, I’m hoping that in the future, as many pointers as possible are signed with the location as a context to help mitigate the effect of pointer substitution attacks. As we can see from our thought experiment, there isn’t much to be gained with a good use-after-free based read primitive if data pointers are simply signed with a context of 0.

The other mitigation to consider is the Memory Tagging Extension (MTE) for ARM, an upcoming CPU feature that I believe Apple will try to implement. There’s a nice high level summary for this mitigation here and here. In essence, memory allocations will be assigned a random tag by the memory allocator that will be part of the upper unused bits of the pointer, like in PAC. The correct tag value will be stored out of line, similar to how ASAN stores heap metadata out of line. When the processor goes to dereference the pointer, it will check if the tag matches. This vulnerability would have been mitigated by MTE, because we trigger the use after free many times in the exploit, and every time the freed pointer would be accessed, its tag would be compared against the new tag for the freed range or that of whichever allocation reclaimed the buffer. What the CPU or kernel is configured to do when a mismatching tag is identified will affect how an exploit proceeds. I would expect Apple to configure either a synchronous or asynchronous exception to occur on tag check failure, considering they make an effort to trigger data aborts for PAC violations according to their LLVM documentation for PAC: “While ARMv8.3's aut* instructions do not themselves trap on failure, the compiler only ever emits them in sequences that will trap.”
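
The tag check can be illustrated with a small Python model. It assumes MTE's 4-bit tags and 16-byte granules, with the tag packed into bits 56 and up of the pointer. The TaggedHeap class and its methods are hypothetical, the caller picks addresses to keep the sketch short, and the model always chooses a tag different from the previous one so the outcome is deterministic, whereas a real allocator picking tags at random could occasionally reuse a tag.

```python
import random

TAG_BITS = 4    # MTE tags are 4 bits wide
GRANULE = 16    # one tag per 16-byte granule
TAG_SHIFT = 56  # tag lives in otherwise unused upper pointer bits


class TagMismatch(Exception):
    """Models the exception raised on a failed tag check."""


class TaggedHeap:
    def __init__(self, size, seed=0):
        self.tags = [0] * (size // GRANULE)  # out-of-line tag storage
        self.rng = random.Random(seed)

    def _retag(self, addr, size):
        old = self.tags[addr // GRANULE]
        choices = [t for t in range(1, 1 << TAG_BITS) if t != old]
        new = self.rng.choice(choices)
        first = addr // GRANULE
        last = (addr + size + GRANULE - 1) // GRANULE
        for granule in range(first, last):
            self.tags[granule] = new
        return new

    def malloc(self, addr, size):
        """Tag the range and return a pointer carrying the same tag."""
        return (self._retag(addr, size) << TAG_SHIFT) | addr

    def free(self, ptr, size):
        """Retag the freed range; stale pointers keep the old tag."""
        self._retag(ptr & ((1 << TAG_SHIFT) - 1), size)

    def load(self, ptr):
        tag = ptr >> TAG_SHIFT
        addr = ptr & ((1 << TAG_SHIFT) - 1)
        if self.tags[addr // GRANULE] != tag:
            raise TagMismatch(hex(addr))
        return addr


heap = TaggedHeap(256)
pktinfo = heap.malloc(0x40, 32)  # hypothetical victim allocation
valid_access = heap.load(pktinfo)
heap.free(pktinfo, 32)           # pointer is now dangling

try:
    heap.load(pktinfo)
    uaf_caught = False
except TagMismatch:
    uaf_caught = True

print(hex(valid_access), uaf_caught)
```

Because this exploit dereferences the dangling pointer repeatedly, each access is a fresh chance for a mismatch to be detected, even when tags are assigned at random.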

Using corrupted code pointers forged by an attacker is a rare occurrence, but invalid heap accesses happen very often in real code. I suspect many bugs will be identified using MTE, and look forward to seeing its use in the iPhone. If it is combined with the current PAC implementation, it will be a huge boost to security for end users.

The iOS Exploit Meta

I found it interesting to see which techniques I used are part of the iOS exploit “meta,” that is, the tricks that are used often in public exploits and those seen in the wild. These were all the techniques I came across, along with whether and how I incorporated them into the exploit. As mentioned earlier, for the closest publicly documented variant of the approach I used, see Stefan Esser’s presentation on the topic, which seems to be the first to use this basket of techniques.

A bug’s life

When testing my exploit on older phones, I noticed that my 32-bit iPhone 5 was still running iOS 9.2. Out of curiosity I tested the highly exploitable disconnectx PoC that permits corrupting memory via the freed buffer and was shocked to see that the PoC worked right away. The kernel panicked when accessing freed memory (0xDEADBEEF was present in one of the registers when the crash occurred). After some more testing, I found that the PoC worked on the first XNU version where disconnectx was introduced: the Mavericks kernel included with the release of macOS 10.9.0. The iOS 7 beta 1 kernel came soon after Mavericks, so it’s likely that every release from iOS 7 beta 1 through iOS 12.2, as well as iOS 12.4, was affected by this bug. September 18, 2013 was the official release date of iOS 7, so it appears that macOS and iOS users were broadly affected by this vulnerability for over 5 years.

Conclusion

It is somewhat surprising that a bug with such a strong initial primitive was present in iOS for as long as it was. I had been following public iOS security research since the iPhone’s inception and it wasn’t until recently that I realized I might be capable of finding a bug in the kernel myself. When I read exploit writeups during the iOS 7 era, I saw that there were large chains of logic bugs combined with memory corruption. But I knew from my work on Chrome that fuzzing tools have become so effective recently that memory corruption bugs in attack surfaces that were thought to be well audited could be discovered again. We can see that this is true for iOS (as much as it is for other platforms like Chrome that were thought to be very difficult to break): single bugs sufficient for privilege escalation existed even during the time when large chains were used. Attacker-side memory corruption research is too easy now: we need MTE or other dynamic checks to start making a dent in this problem.

I’d like to give credit to @_bazad for his patience with my questions about Mach. SockPuppet was heavily inspired by his voucher_swap exploit, which was in turn inspired by many exploit techniques that came before it. It is really a testament to the strength of some exploitation tricks that they appear in so many exploits. With PAC protection for key data pointers, we may see the meta shift again as the dominant approach of injecting fake task ports is on Apple’s radar, and mitigations against it are arriving.

Finally, if Apple made XNU sources available more often, ideally per-commit, I could have automated merging my fuzzer against the sources and we could have caught the iOS 12.4 regression immediately. Chromium and OSS-Fuzz have already had success with this model. A fuzzer I submitted to Chrome’s fuzzer program that initially found only 3 bugs has now found 95 stability and security regressions since submission. By opening the sources more frequently to the public, we have the opportunity to catch an order of magnitude more critical bugs before they even make it to beta.
